If you’ve ever launched an AI feature and later realized it wasn’t quite ready—or struggled to define what “quality” even looks like in an AI product—this episode is for you.

In this episode of Supra Insider, Marc and Ben sit down with Aman Khan, Head of Product at Arize AI and a leading voice in AI product evaluation. Aman has helped dozens of teams, from scrappy startups to massive consumer platforms, build systematic approaches for evaluating LLM-powered features. Together, they explore the dangers of “vibe coding” your way to production, how to define and operationalize evals across different layers of your product, and why even “good” outputs can still lead to bad outcomes without proper measurement.

Whether you’re a PM under pressure to ship AI features fast, or a product leader figuring out how to instill quality and reliability into your development process, this conversation is packed with frameworks, analogies (like self-driving cars), and hard-won lessons you can use to build smarter and ship more confidently.

All episodes of the podcast are also available on Spotify, Apple and YouTube.

New to the pod? Subscribe below to get the next episode in your inbox 👇

To support the podcast, please check out the links below:

Supra has teamed up with Maven to bring you something special – courses that our own community members have personally curated. And because you're part of the Supra family, you get $100 off any of these handpicked selections with code

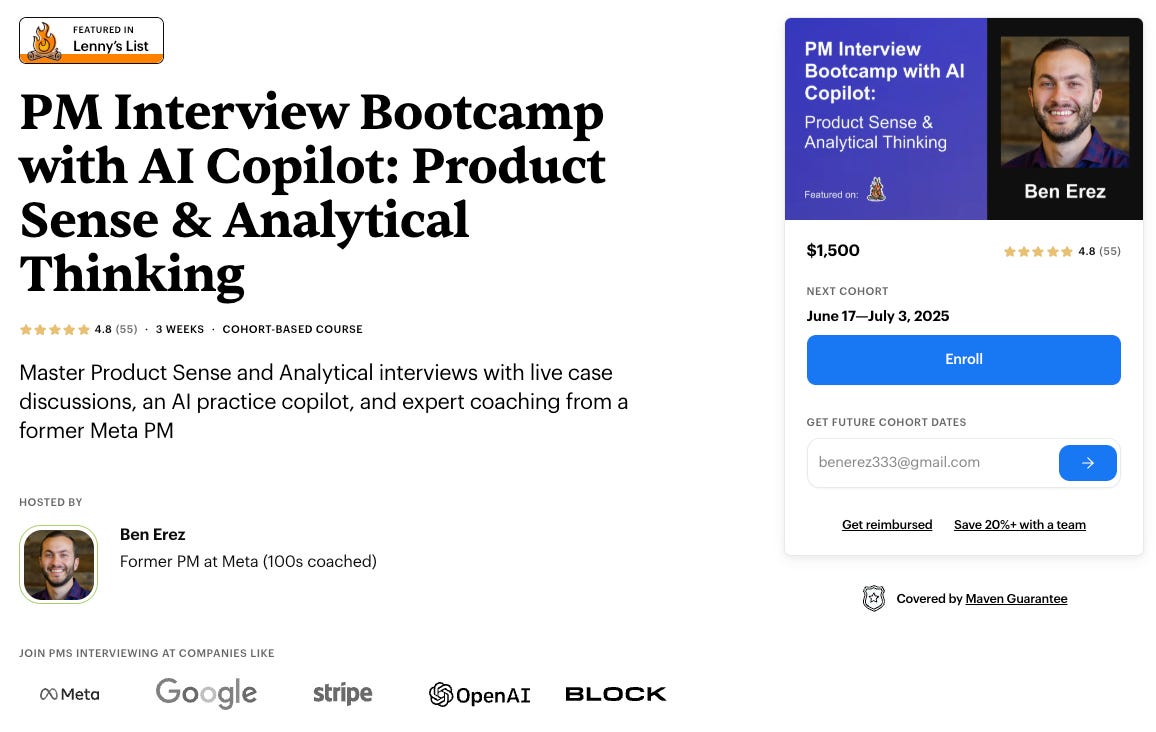

SUPRAxMAVEN. Aman’s course is part of the partnership 🚀Preparing for a PM interview with tech companies like Meta and Google? Check out Ben's course for Product Sense and Analytical Thinking PM interviews (selected by Lenny Rachitsky as a Top Course in Product on Maven 🔥) and his AI Copilot for interview practice. Supra Insider listeners get 10% off the course with promo code “suprainsider”. Enrollment is now open for the next cohort starting July 15th.

In this episode, we covered the following topics:

(00:00) The danger of launching AI features without proper evaluation—and why trust is hard to rebuild

(04:12) “Vibe coding” explained: when teams ship based on gut feel instead of structured validation

(09:07) Real-world example: a travel chatbot gets great engagement—until users start jailbreaking it

(15:36) Defining “good enough”: how to set AI quality metrics across tone, latency, hallucinations, and risk

(19:58) What PMs can learn from self-driving car evals—and how to build iterative test sets for reliability

(23:04) How LLM-as-a-judge works: using one model to grade another, and when to rely on human labels

(34:14) Spotting dangerous “false positives”: when outputs seem right but come from flawed reasoning

(42:22) What to do when your evals say “good” but the business metrics drop—and who’s responsible

And more!

If you’d like to watch the video of our conversation, you can catch it on YouTube.

Links:

Aman Khan Website: http://amank.ai

Aman Khan LinkedIn: https://www.linkedin.com/in/amanberkeley/

Aman Khan X: https://x.com/_amankhan


Supra members at our meetups across the US. Check out Supra: https://www.joinsupra.com/

Preparing for a PM interview with tech companies like Meta and Google? Check out Ben's course for Product Sense and Analytical Thinking PM interviews (selected by Lenny Rachitsky as a Top Course in Product on Maven 🔥) and his AI Copilot for interview practice. Supra Insider listeners get 10% off the course with promo code “suprainsider”. Enrollment is now open for the next cohort starting June 17th.

